On the final day of its 12-day event, OpenAI unveiled its latest AI models, O3 and O3-mini, a significant advance over its previous "reasoning" model, O1.

The models are designed to strengthen reasoning capabilities and, under specific conditions, approach the boundaries of AGI.

Currently available for safety testing, these models are set for a broader release in early 2025.

What Is OpenAI O3?

The O3 model family, including the more compact O3-mini, is engineered to tackle complex tasks in coding, mathematics, and general intelligence.

To improve safety and reliability, OpenAI has also introduced "deliberative alignment," a technique in which the models reason through OpenAI's safety guidelines in a "private chain of thought" before responding.

Comparison Between O3 & O1

The O3 model represents a significant evolution from the O1 model, primarily through its advanced reasoning abilities.

While O1 laid the groundwork for reasoning tasks, O3 has been designed to handle more complex problems with greater accuracy and efficiency.

It achieves this by incorporating a "private chain of thought," allowing the model to plan and reason through tasks more effectively.

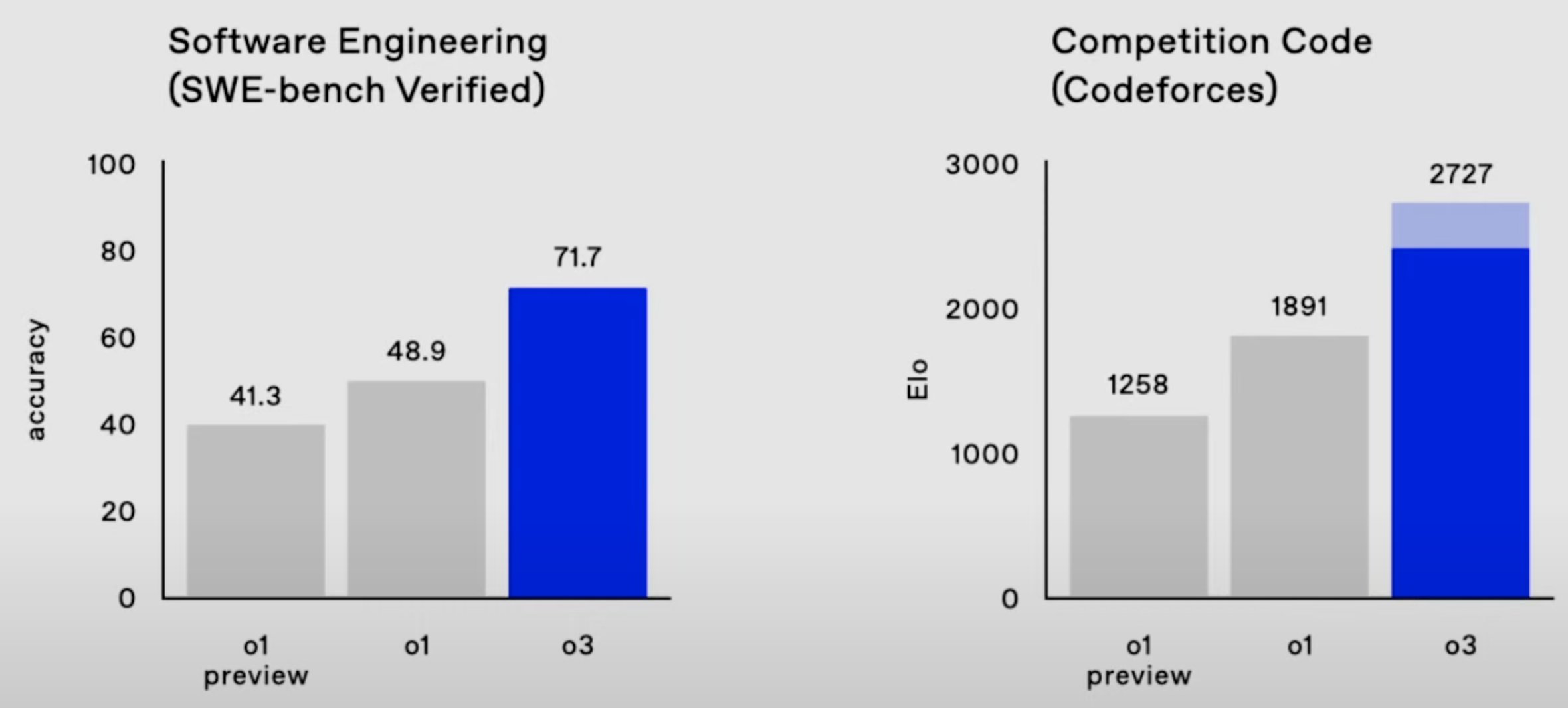

Coding Performance

(Source: OpenAI)

In coding tasks, O3 demonstrates a marked improvement over O1.

On the SWE-Bench Verified benchmark, which assesses real-world software engineering tasks, O3 resolves 71.7% of tasks, well ahead of O1's 48.9%.

In competitive programming on Codeforces, O3 reaches an Elo rating of 2727, far above O1's 1891. This indicates O3's superior ability to tackle complex coding challenges.
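To put the 836-point gap in perspective, the classic Elo formula gives the expected score of one player against another as 1 / (1 + 10^((R_b - R_a) / 400)). The sketch below applies it to the two reported ratings. It is only an illustration: it assumes Codeforces ratings behave like standard Elo ratings, which is an approximation, and the function name is our own.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score (win probability, draws counted as half) of A vs. B under standard Elo."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Ratings as reported by OpenAI for Codeforces.
O3_RATING = 2727
O1_RATING = 1891

gap = O3_RATING - O1_RATING
p_o3 = elo_expected_score(O3_RATING, O1_RATING)

print(f"Rating gap: {gap} points")
print(f"Expected score of O3 against an O1-rated opponent: {p_o3:.3f}")
```

Under this simplified model, an O3-level competitor would be expected to beat an O1-level one in roughly 99 out of 100 head-to-head contests.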

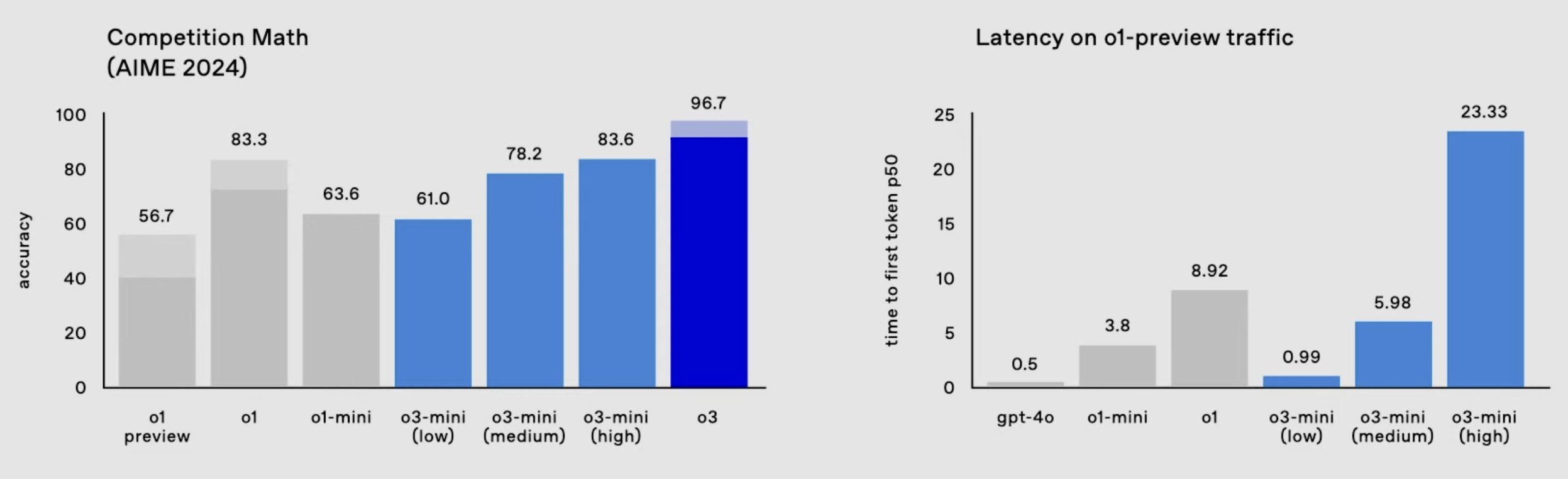

Mathematical Reasoning Performance

(Source: OpenAI)

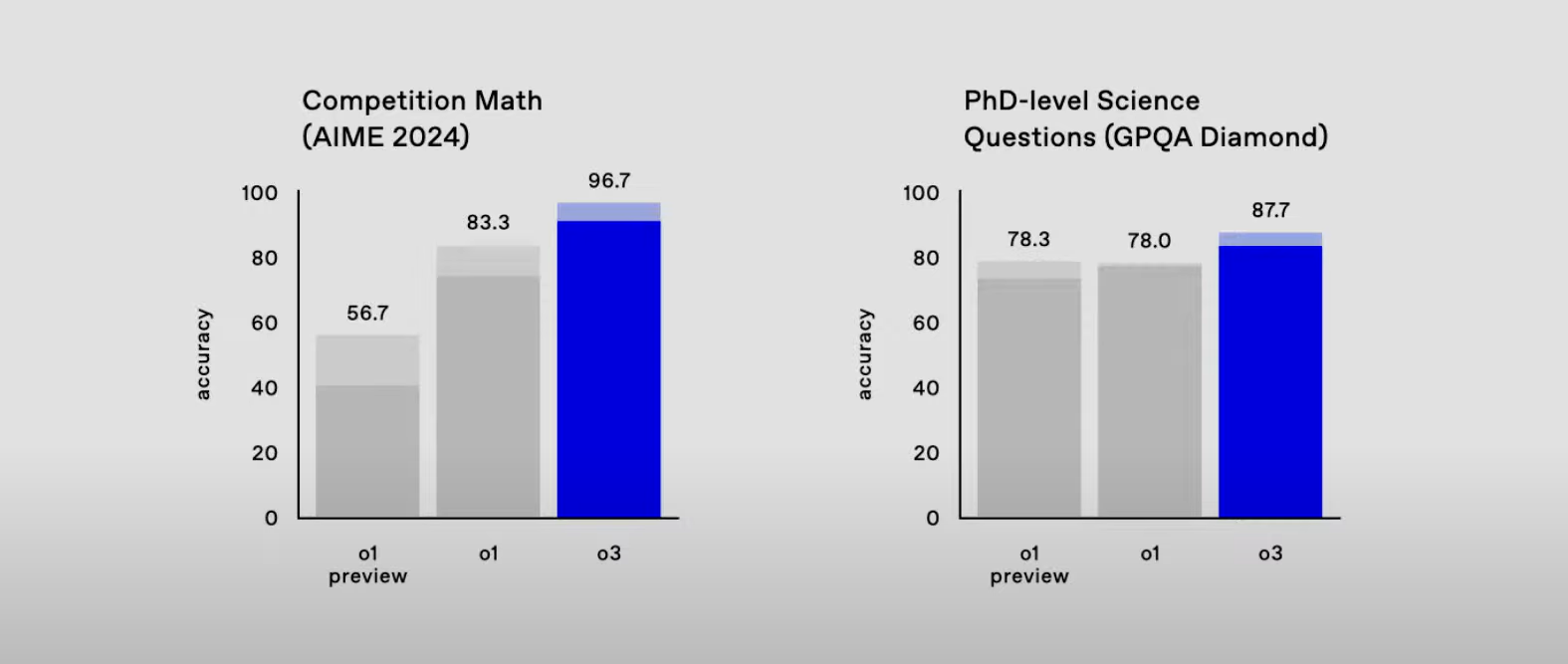

O3 also excels in mathematical reasoning compared to O1.

On the American Invitational Mathematics Exam (AIME) 2024, O3 scores an impressive 96.7%, missing only one question, whereas O1 scores 83.3%.
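The two percentages are consistent with a simple count. AIME 2024 consisted of two papers (AIME I and AIME II) of 15 problems each. Assuming the score is taken over all 30 problems, 96.7% corresponds to 29 correct answers (one miss) and 83.3% to 25. The snippet below just checks that arithmetic; the 30-problem denominator is our assumption, not a figure from OpenAI.

```python
# Assumption: the score covers AIME 2024 I and II, 15 problems each.
TOTAL_PROBLEMS = 30


def percent_correct(correct: int, total: int = TOTAL_PROBLEMS) -> float:
    """Percentage of problems answered correctly."""
    return 100 * correct / total


for model, correct in [("O3", 29), ("O1", 25)]:
    print(f"{model}: {correct}/{TOTAL_PROBLEMS} = {percent_correct(correct):.1f}%")
```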

This improvement suggests that O3 is better equipped to handle intricate mathematical problems, moving closer to human-level performance in this domain.

General Science Performance

(Source: OpenAI)

In the realm of general science and intelligence, O3 outperforms O1 on assessments like GPQA Diamond, which involves graduate-level science questions.

O3 achieves an accuracy of 87.7%, compared to O1's 78%. This demonstrates O3's enhanced capability in solving technically demanding problems across various scientific disciplines.

Reasoning and Safety Features

O3 introduces adjustable reasoning time, letting users tailor how long the model "thinks" to the complexity of the task, a feature O1 did not offer at launch.
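In OpenAI's API, reasoning models expose this kind of control through a `reasoning_effort` request field with the values `"low"`, `"medium"`, and `"high"`. The sketch below only builds a request payload; it assumes the model is exposed under the name `o3-mini`, and the complexity labels and the `build_request` helper are hypothetical, chosen purely for illustration.

```python
# Hypothetical mapping from a rough task-complexity label to a reasoning-effort level.
EFFORT_BY_COMPLEXITY = {"simple": "low", "moderate": "medium", "hard": "high"}


def build_request(prompt: str, complexity: str, model: str = "o3-mini") -> dict:
    """Build a Chat Completions-style payload with a reasoning-effort setting.

    The model name and complexity labels are assumptions for this sketch.
    """
    if complexity not in EFFORT_BY_COMPLEXITY:
        raise ValueError(f"unknown complexity label: {complexity!r}")
    return {
        "model": model,
        "reasoning_effort": EFFORT_BY_COMPLEXITY[complexity],
        "messages": [{"role": "user", "content": prompt}],
    }


request = build_request("Prove that the square root of 2 is irrational.", "hard")
print(request["reasoning_effort"])
```

With the official `openai` Python package, a payload like this could be passed as `client.chat.completions.create(**request)`. Higher effort trades latency and token cost for more deliberate reasoning, so a low setting suits quick lookups and a high setting suits hard proofs or debugging.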

Moreover, O3 employs "deliberative alignment" for improved safety, enabling the model to dynamically assess prompts and identify potential risks more effectively than O1.

In summary, O3 offers significant advancements over O1 in coding, mathematics, and general intelligence, along with enhanced reasoning and safety features, making it a more powerful and versatile tool for complex problem-solving tasks.

O3 Release Date and Availability

O3 and O3-mini are currently available for safety testing, with O3-mini expected to launch by the end of January 2025, followed by O3. This cautious rollout underscores OpenAI's commitment to responsible AI deployment.

Editor's Comments

The O3 and O3-mini models represent a significant leap in AI reasoning capabilities, with promising benchmark results.

However, their real-world application remains to be evaluated.

OpenAI's phased release and focus on safety testing reflect the challenges of deploying advanced AI technologies responsibly.

These models may be pivotal in the journey toward achieving AGI, setting the stage for future innovations.