Google Research and DeepMind have launched VaultGemma, a 1-billion-parameter AI model designed with differential privacy (DP) to protect sensitive data while maintaining robust performance.

This open-source breakthrough addresses growing concerns about data leakage in large language models (LLMs), offering a secure solution for industries like healthcare and finance.

A New Era for Privacy-Focused AI

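VaultGemma, built on Google's Gemma 2 architecture, is trained with differential privacy from the start rather than having it added afterward, so the released weights carry a formal bound on how much they can reveal about any single training sequence.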

Unlike traditional LLMs, which risk exposing sensitive information through adversarial prompts, VaultGemma's design limits how much the model can memorize about any individual training sequence, making it a game-changer for ethical AI development.

What Makes VaultGemma Unique?

VaultGemma is a decoder-only transformer with 26 layers and Multi-Query Attention, pretrained on the same data as Gemma 2.

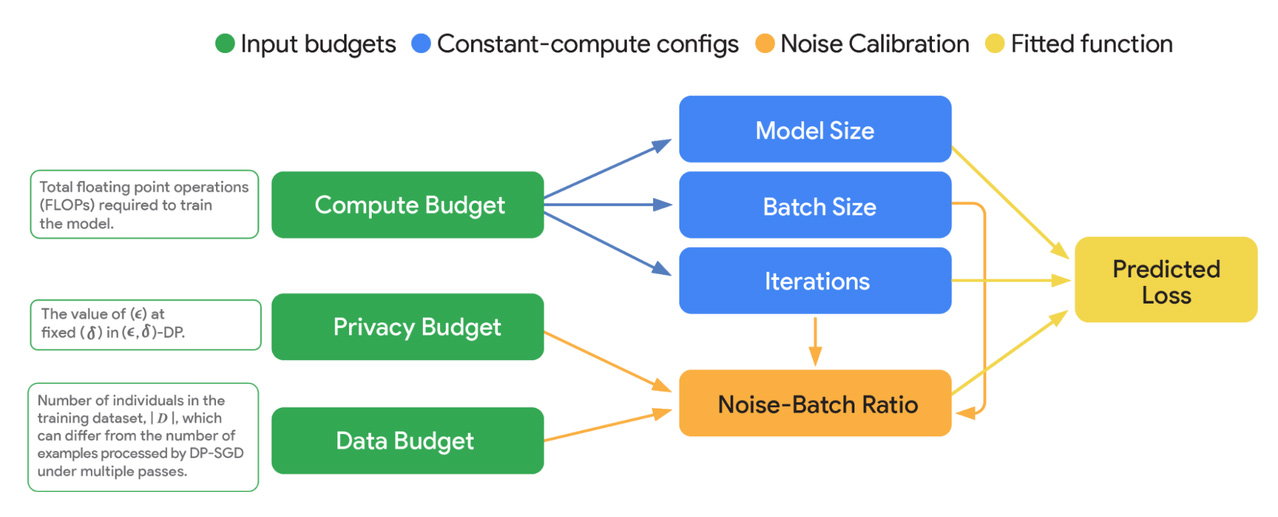

Its differential privacy is achieved through Differentially Private Stochastic Gradient Descent (DP-SGD), which clips each example's gradient to a fixed norm and then adds Gaussian noise to the aggregated gradient before every update.
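The two ingredients of DP-SGD, clipping and Gaussian noise, can be illustrated with a minimal pure-Python sketch. It is not VaultGemma's training code: the function names, the toy two-dimensional gradients, and the `clip_norm` and `noise_multiplier` values are all assumptions chosen for illustration.

```python
import math
import random


def l2_norm(vec):
    """Euclidean norm of a gradient given as a list of floats."""
    return math.sqrt(sum(x * x for x in vec))


def clip_gradient(grad, clip_norm):
    """Scale grad down so its L2 norm is at most clip_norm."""
    norm = l2_norm(grad)
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_update(per_example_grads, clip_norm, noise_multiplier, rng):
    """Return the noisy average gradient for one DP-SGD step."""
    batch_size = len(per_example_grads)
    dim = len(per_example_grads[0])
    # 1. Clip every example's gradient so no single example dominates.
    clipped = [clip_gradient(g, clip_norm) for g in per_example_grads]
    # 2. Sum the clipped gradients coordinate by coordinate.
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    # 3. Add Gaussian noise whose scale is tied to the clipping norm.
    sigma = noise_multiplier * clip_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    # 4. Average over the batch.
    return [n / batch_size for n in noisy]


rng = random.Random(0)  # fixed seed so the toy run is repeatable
toy_grads = [[3.0, 4.0], [0.3, 0.4], [-6.0, 8.0]]  # illustrative values only
update = dp_sgd_update(toy_grads, clip_norm=1.0, noise_multiplier=1.1, rng=rng)
print(update)
```

Clipping bounds how much any one example can move the model, and the noise then masks whatever contribution remains. A production implementation would also track the cumulative privacy budget across steps, which this sketch omits.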

This yields a formal privacy guarantee (ε ≤ 2.0, δ ≤ 1.1e−10 for sequences up to 1,024 tokens), meaning the trained model is statistically almost indistinguishable from one trained without any given sequence.
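For reference, the guarantee quoted above follows the standard (ε, δ) definition of differential privacy. The notation here is the textbook one, not taken from Google's report: M is the randomized training procedure, D and D′ are two datasets that differ in a single record (here, one sequence of up to 1,024 tokens), and S is any set of possible outcomes.

$$
\Pr[M(D) \in S] \;\le\; e^{\varepsilon} \, \Pr[M(D') \in S] + \delta
$$

With ε = 2, the factor e^ε is about 7.39, so adding or removing one sequence can change the probability of any outcome by at most that factor, plus the very small additive slack δ.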

Trained on Google's TPUv6e hardware using JAX and ML Pathways, it employs optimizations like vectorized clipping and large batch sizes to maintain efficiency despite DP's computational demands.
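One way to see why large batches help is a back-of-the-envelope calculation. In DP-SGD the Gaussian noise is added once to the summed gradient, so after averaging its standard deviation shrinks in proportion to the batch size. The helper name and the numbers below are illustrative assumptions, not VaultGemma's actual settings.

```python
def noise_std_on_mean(clip_norm, noise_multiplier, batch_size):
    """Per-coordinate noise std after averaging a noisy gradient sum."""
    return noise_multiplier * clip_norm / batch_size


# Hypothetical settings: same clipping norm and noise multiplier throughout,
# only the batch size changes.
for batch_size in (256, 4096, 65536):
    std = noise_std_on_mean(clip_norm=1.0, noise_multiplier=1.0,
                            batch_size=batch_size)
    print(f"batch={batch_size:>6}  noise std on averaged gradient={std:.2e}")
```

This holds the noise multiplier fixed. In a full privacy analysis the batch size also changes the sampling rate, so the accounting has to be redone for each configuration.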

Performance and Privacy: Striking a Balance

While differential privacy introduces a performance trade-off, VaultGemma performs comparably to non-private models like GPT-2 (1.5B parameters).

Benchmarks show it achieves ~45% on MMLU reasoning tasks, slightly below Gemma 3 1B’s 52%, but with no detectable data leakage, a critical advantage for sensitive applications.

Benchmark Insights

Google's research, "Scaling Laws for Differentially Private Language Models," outlines how compute, privacy budgets, and model size impact performance.

VaultGemma rivals older non-DP models on tasks like Big-Bench Hard, but a utility gap relative to current non-private models of similar size persists.

Ongoing advancements in DP techniques could further close this gap, enhancing its versatility.

Real-World Applications and Accessibility

VaultGemma's privacy-first design makes it ideal for regulated sectors.

In healthcare, it could analyze patient data securely; in finance, it could enhance fraud detection without compromising user information.

Available on platforms like Hugging Face and Kaggle, it supports GPU, TPU, and CPU usage, though resource demands may challenge smaller systems like Google Colab's free tier.
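For readers who want to try the model, a minimal loading sketch with the Hugging Face `transformers` library might look like the following. The repository id `google/vaultgemma-1b` is an assumption and should be checked against the model page. The sketch also assumes that `transformers` (a version with VaultGemma support) and PyTorch are installed and that any license terms on the model page have been accepted. The small helper estimates memory for the weights only.

```python
MODEL_ID = "google/vaultgemma-1b"  # assumed repo id; verify on Hugging Face


def weight_memory_gib(num_params, bytes_per_param):
    """Rough memory needed for the weights alone, in GiB."""
    return num_params * bytes_per_param / 2**30


def generate(prompt, max_new_tokens=32):
    """Download the model (on first call) and complete the prompt."""
    # Imported lazily so the estimate above works without transformers.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


# Weights-only estimate for a 1-billion-parameter model.
print(round(weight_memory_gib(1_000_000_000, 2), 2), "GiB at 16-bit")
print(round(weight_memory_gib(1_000_000_000, 4), 2), "GiB at 32-bit")
```

Calling `generate("Differential privacy is")` would trigger the download and run a short completion. Activations and other runtime buffers add to the weights-only figure, so actual memory use will be higher.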

Community and Industry Impact

Industry leaders praise VaultGemma as a "secure AI revolution," particularly for its open-source availability.

Social media discussions highlight enthusiasm for its potential in regulated industries, though some users note hardware limitations.

Its release underscores Google's commitment to responsible AI, setting a benchmark for privacy-preserving models.

Editor's Comments

VaultGemma represents a pivotal step toward ethical AI, addressing a critical gap in data privacy that has long plagued LLMs.

Its open-source nature democratizes access to secure AI, fostering innovation in sensitive domains.

However, the performance trade-off highlights a broader challenge: balancing privacy with utility.

As DP techniques evolve, expect future models to narrow this gap, potentially reshaping AI deployment in regulated sectors.

Google's transparency in sharing technical details and weights sets a high standard, but widespread adoption may hinge on optimizing resource demands for smaller developers.

This launch could spur competitors to prioritize privacy, accelerating a race toward safer AI systems.

FAQs

What is VaultGemma?

VaultGemma is a 1-billion-parameter AI model by Google, designed with differential privacy to protect training data while maintaining performance.

How does differential privacy work in VaultGemma?

It uses DP-SGD, which clips each example's gradient and adds Gaussian noise during training, limiting how much the model's outputs can reveal about any specific training sequence.

How does VaultGemma compare to other AI models?

It performs comparably to older non-DP models like GPT-2 but lags slightly behind non-private Gemma 3 1B, with the benefit of no detectable data leakage.

Where can developers access VaultGemma?

It's available on Hugging Face and Kaggle, supporting GPU, TPU, and CPU environments.

What are VaultGemma's applications?

It's ideal for secure data analysis in healthcare, finance, and education, where privacy is critical.

Why is there a performance gap?

Differentially private training clips gradients and adds noise, which reduces accuracy compared to non-private models, though optimizations are narrowing this gap.

Can VaultGemma run on free platforms like Google Colab?

Its resource demands may exceed free-tier capabilities, requiring robust hardware like TPUs or high-end GPUs.

How does VaultGemma impact AI ethics?

It sets a standard for privacy-first AI, addressing concerns about data leakage and enabling safer deployment in regulated industries.