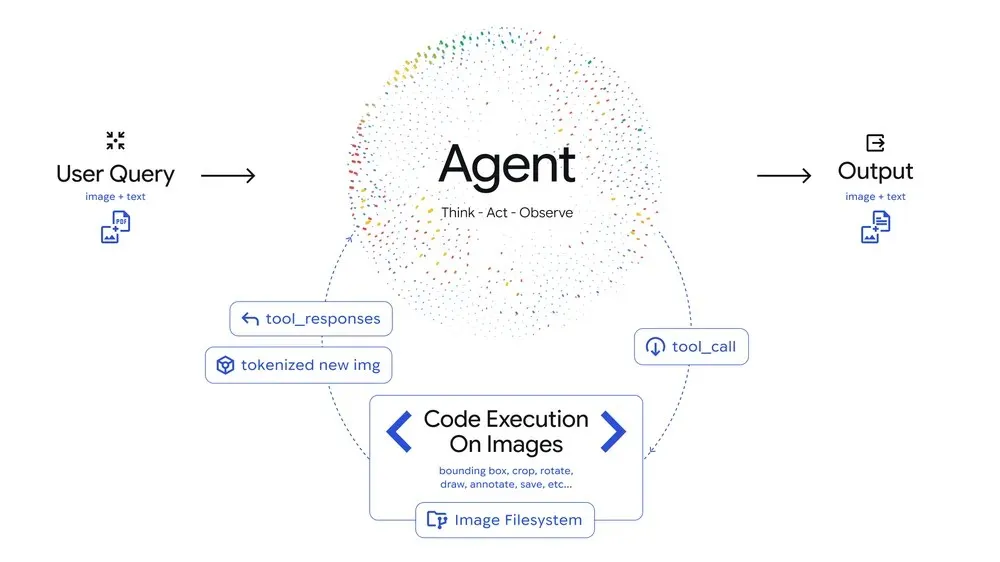

Google just leveled up its Gemini 3 Flash model. The new Agentic Vision feature lets the model actively reason about images instead of just describing them. It can even run Python code to manipulate visuals, making outputs more accurate and useful for real-world tasks.

Image Credits: TestingCatalog

AI That Actually Thinks About What It Sees

From Static Descriptions to Smart Reasoning

Gone are the days when AI just labeled an image and called it a day. With Agentic Vision, Gemini 3 Flash takes a step-by-step approach: zooming, annotating, cropping, and reasoning before producing an answer. It’s like giving the AI a brain to double-check what it sees.

Code-Powered Visual Intelligence

The twist? Gemini 3 Flash can execute Python code on the fly. It can draw boxes, highlight details, parse data tables, and even generate charts from images. Because the answer is grounded in what the code actually produced, there is less guesswork, and Google reports a 5–10% quality boost on most vision benchmarks when code execution is enabled.
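To make the "draw boxes and crop" idea concrete, here is a minimal sketch of the kind of Python such a step might involve. It is an illustration only, not the code Gemini actually runs: the image is a plain grid of grayscale pixels, the helper names (`to_pixel_box`, `crop`, `draw_box`) are hypothetical, and the `[ymin, xmin, ymax, xmax]` box on a 0–1000 scale is an assumption about how coordinates are reported.

```python
from typing import List, Tuple

Image = List[List[int]]          # grayscale pixels, one inner list per row
PixelBox = Tuple[int, int, int, int]  # (left, top, right, bottom), right/bottom exclusive


def to_pixel_box(box: List[int], width: int, height: int) -> PixelBox:
    """Scale an assumed [ymin, xmin, ymax, xmax] box (0-1000) to pixel coordinates."""
    ymin, xmin, ymax, xmax = box
    return (xmin * width // 1000, ymin * height // 1000,
            xmax * width // 1000, ymax * height // 1000)


def crop(image: Image, box: PixelBox) -> Image:
    """Return the region inside the box, i.e. a 'zoom' on one detail."""
    left, top, right, bottom = box
    return [row[left:right] for row in image[top:bottom]]


def draw_box(image: Image, box: PixelBox, value: int = 255) -> Image:
    """Return a copy of the image with the outline of the box set to `value`."""
    left, top, right, bottom = box
    if right <= left or bottom <= top:
        raise ValueError("box must have positive width and height")
    out = [row[:] for row in image]
    for x in range(left, right):          # top and bottom edges
        out[top][x] = value
        out[bottom - 1][x] = value
    for y in range(top, bottom):          # left and right edges
        out[y][left] = value
        out[y][right - 1] = value
    return out


if __name__ == "__main__":
    blank = [[0] * 10 for _ in range(10)]
    pixel_box = to_pixel_box([200, 300, 600, 800], width=10, height=10)
    annotated = draw_box(blank, pixel_box)
    for row in annotated:
        print("".join("#" if p else "." for p in row))
```

In a real app a library such as Pillow would handle the pixels. The point is that the crop or annotation is computed rather than guessed, so the model can inspect the result before it answers.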

Easy Access for Developers and Apps


Rollout Across Google Tools

Agentic Vision is available now through the Gemini API in Google AI Studio and Vertex AI, with the Gemini app getting the upgrade soon. Developers can integrate it into apps to deliver smarter image-based features and richer visual experiences.
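For developers wondering what an integration might look like, the sketch below builds (but does not send) a request body for the Gemini API with the code execution tool switched on. Treat the details as assumptions to check against the current API reference: the model ID `gemini-3-flash-preview`, the `inline_data` and `code_execution` field names, and the helper `build_request` are illustrative, and the article itself does not spell out how the feature is enabled.

```python
import base64
import json

# Assumed REST endpoint pattern for the Gemini API; verify before use.
API_URL_TEMPLATE = (
    "https://generativelanguage.googleapis.com/v1beta/models/{model}:generateContent"
)


def build_request(image_bytes: bytes, prompt: str,
                  model: str = "gemini-3-flash-preview",  # assumed model ID
                  mime_type: str = "image/png"):
    """Return (url, body) for an image question with code execution enabled."""
    body = {
        "contents": [{
            "parts": [
                # The image travels inline as base64 text.
                {"inline_data": {
                    "mime_type": mime_type,
                    "data": base64.b64encode(image_bytes).decode("ascii"),
                }},
                {"text": prompt},
            ]
        }],
        # Assumption: this tool is what lets the model run Python on the image.
        "tools": [{"code_execution": {}}],
    }
    return API_URL_TEMPLATE.format(model=model), body


if __name__ == "__main__":
    url, body = build_request(b"placeholder image bytes", "How many items are on the shelf?")
    print(url)
    print(json.dumps(body, indent=2)[:300])
```

Sending the request would also need an API key and an HTTP client, which are left out here to keep the sketch self-contained.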

Why It Matters for Apps and ASO

For app developers and marketers, smarter AI means better image recognition, photo tagging, and visual analytics. Features like these can help apps stand out in crowded app stores, drive engagement, and improve key app store optimization (ASO) metrics.

Looking Ahead

More Tools, Smarter AI

Google plans to expand Agentic Vision to more models and add features like automatic image adjustments and web search grounding. The future? AI that not only sees but also acts intelligently on visual data.

Comments

Agentic Vision shows how AI is moving from static answers to interactive reasoning. Apps that leverage this can create standout features, from image-based search to smarter analytics, giving them an edge in competitive markets.