On March 25, 2025, Google DeepMind introduced Gemini 2.5 Pro, an advanced AI model designed to tackle complex reasoning, science, and coding tasks.

As the latest iteration in the Gemini series, this model builds upon its predecessor, Gemini 2.0 Pro, with significant improvements in performance benchmarks.

Pricing and Availability

For individual users, Gemini 2.5 Pro is available through the Gemini Advanced subscription, which costs $19.99 per month.

Developers can access it via Google AI Studio, with pricing details to follow in the coming weeks. It will also be available on Vertex AI soon.

Key Features and Enhancements

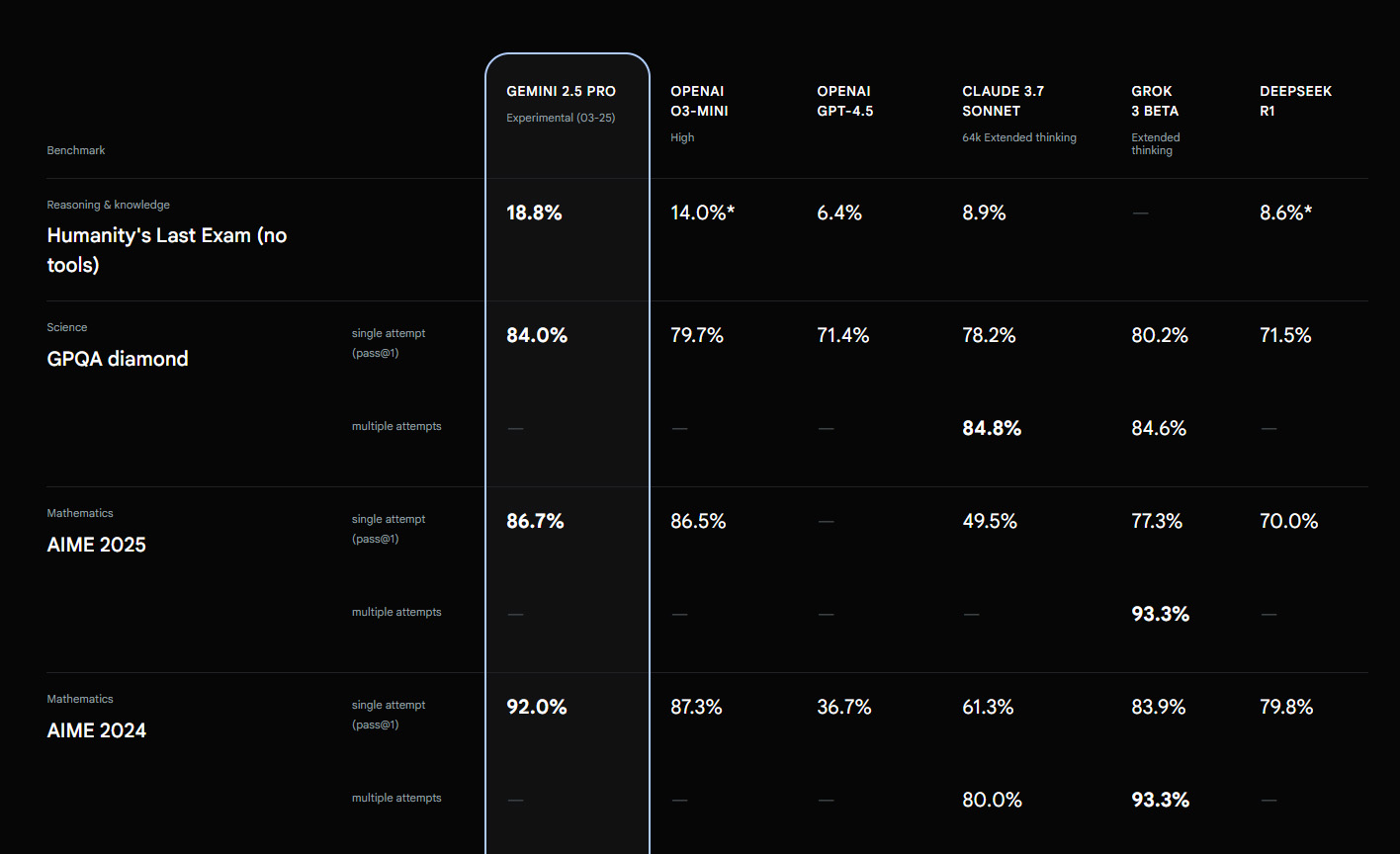

Gemini 2.5 Pro excels in tasks requiring deep reasoning and scientific knowledge. Its benchmark scores indicate a notable improvement over previous models:

(Source: Google)

Superior Reasoning and Scientific Capabilities

- Humanity's Last Exam (Reasoning & Knowledge): 18.8%, significantly outperforming OpenAI’s GPT-4.5 (6.4%).

- GPQA Diamond (Scientific Reasoning): 84.0%, surpassing GPT-4.5 (79.7%).

- AIME 2024 (Mathematics): 92.0%, a substantial increase from Gemini 2.0 Pro's 72%.

These results suggest that Gemini 2.5 Pro is particularly suited for applications requiring logical deduction, scientific analysis, and mathematical problem-solving.

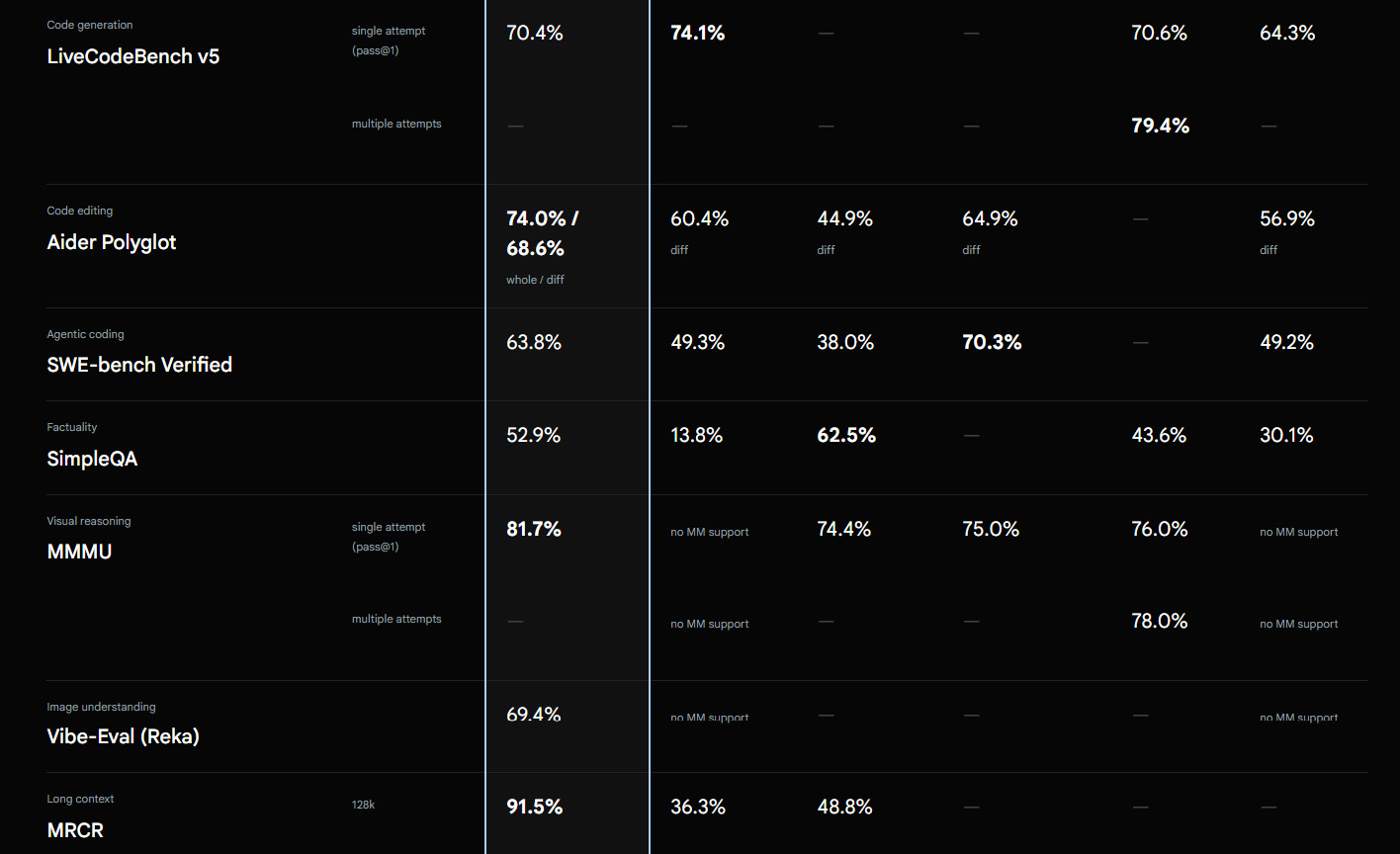

Advanced Coding and Multimodal Capabilities

The model demonstrates strong coding performance, though it remains competitive rather than dominant in some areas:

- SWE-bench Verified (Agentic Coding): 63.8%, trailing Claude 3.7 Sonnet (70.3%).

Additionally, Gemini 2.5 Pro accepts multimodal inputs (text, images, audio, and video) and can generate the code for animations, simulations, and interactive applications from simple prompts.

Expanded Context Window for Processing Large Data

One of the standout features of Gemini 2.5 Pro is its massive context window of 1 million tokens, with plans to expand to 2 million tokens.

This makes it particularly useful for processing extensive datasets, long-form documents, and complex problem-solving scenarios without losing context.
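To make the scale of the context window concrete, here is a minimal sketch of a back-of-the-envelope fit check. It assumes roughly 4 characters per token, a common rule of thumb for English text and not a property of Gemini's tokenizer; the function names are hypothetical, and an exact count would have to come from the model's own token counting.

```python
import math

CONTEXT_WINDOW_TOKENS = 1_000_000  # current window size stated in the article
PLANNED_WINDOW_TOKENS = 2_000_000  # planned expansion stated in the article
CHARS_PER_TOKEN = 4                # ASSUMPTION: rough average for English text


def estimate_tokens(num_chars, chars_per_token=CHARS_PER_TOKEN):
    """Roughly estimate the token count of a text from its character count."""
    return math.ceil(num_chars / chars_per_token)


def fits_in_context(num_chars, window=CONTEXT_WINDOW_TOKENS, reserve=0):
    """Check whether the estimated tokens fit, leaving `reserve` tokens for the reply."""
    return estimate_tokens(num_chars) <= window - reserve


if __name__ == "__main__":
    for chars in (3_000_000, 5_000_000):
        print(
            chars,
            estimate_tokens(chars),
            fits_in_context(chars),
            fits_in_context(chars, window=PLANNED_WINDOW_TOKENS),
        )
```

Under this assumption, a 3-million-character corpus (about 750,000 estimated tokens) fits in the current window, while a 5-million-character corpus would only fit after the planned expansion to 2 million tokens.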

Comparison with Other Leading AI Models

Outperforming GPT-4.5, Competing with Claude 3.7 Sonnet

Benchmark comparisons indicate that Gemini 2.5 Pro outperforms OpenAI's GPT-4.5 in reasoning and science while competing closely with Claude 3.7 Sonnet in coding tasks:

| Benchmark | Gemini 2.5 Pro | GPT-4.5 | Claude 3.7 Sonnet |
|---|---|---|---|
| Humanity's Last Exam (Reasoning) | 18.8% | 6.4% | 8.9% |
| GPQA Diamond (Science) | 84.0% | 79.7% | 80.2% |
| AIME 2024 (Math) | 92.0% | 61.3% | 83.9% |
| LiveCodeBench v5 (Code Gen) | 70.4% | - | 70.6% |
| SWE-bench Verified (Agentic Coding) | 63.8% | - | 70.3% |

These results highlight Gemini 2.5 Pro's strengths in reasoning and scientific domains while indicating room for improvement in certain coding tasks compared to Claude 3.7 Sonnet.

Improvements Over Gemini 2.0 Pro

Compared to its predecessor, Gemini 2.5 Pro demonstrates substantial gains across multiple benchmarks:

- GPQA Diamond (Science): Increased from 62% to 84%

- Humanity’s Last Exam (Reasoning): Improved from 7.7% to 18.8%

- LiveCodeBench (Coding): Jumped from 47% to 70.4%

- AIME 2024 (Mathematics): Boosted from 72% to 92%

These improvements suggest that Google DeepMind has significantly enhanced the model's reasoning, science, and coding capabilities through refinements in architecture and training data processing.
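The gains over Gemini 2.0 Pro can be read two ways: as percentage-point differences or as relative improvements over the old score. The short sketch below computes both from the figures listed above; the dictionary and function names are illustrative only.

```python
# Scores in percent, (Gemini 2.0 Pro, Gemini 2.5 Pro), as reported in the article.
scores = {
    "GPQA Diamond": (62.0, 84.0),
    "Humanity's Last Exam": (7.7, 18.8),
    "LiveCodeBench": (47.0, 70.4),
    "AIME 2024": (72.0, 92.0),
}


def gains(old, new):
    """Return (percentage-point gain, relative gain in percent), rounded to 0.1."""
    absolute = new - old
    relative = absolute / old * 100
    return round(absolute, 1), round(relative, 1)


report = {name: gains(old, new) for name, (old, new) in scores.items()}

if __name__ == "__main__":
    for name, (points, relative) in report.items():
        print(f"{name}: +{points} points ({relative}% relative)")
```

Read this way, LiveCodeBench shows the largest absolute gain (23.4 points), while Humanity's Last Exam shows the largest relative one, with the score more than doubling from a low base.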

Editor's Comments

With the release of Gemini 2.5 Pro, Google DeepMind continues to push the boundaries of AI capabilities, particularly in reasoning and scientific analysis.

While it surpasses many competitors in these areas, its coding abilities remain competitive rather than revolutionary compared to Anthropic's Claude models.

The model's expanded context window and multimodal capabilities make it a powerful tool for researchers, developers, and businesses handling complex data-driven tasks.

Looking ahead, the anticipated expansion to a 2-million-token context window could further enhance its ability to process vast amounts of information efficiently, potentially setting a new industry standard for large-scale AI applications.

However, its ultimate impact will depend on how well it integrates into enterprise environments via Vertex AI and how its pricing structure evolves for developers seeking scalable solutions.