Google has launched Gemini 2.0 Pro Experimental, its most advanced AI model to date, targeting developers and enterprises with enhanced coding performance, a 2-million-token context window, and integration with tools like Google Search and code execution.

Released alongside the production-ready Gemini 2.0 Flash and the cost-efficient 2.0 Flash-Lite, the launch marks a strategic push in the AI arms race, emphasizing multimodal reasoning and affordability.

Gemini 2.0 Pro's Capabilities

Unprecedented Context and Coding Prowess

Gemini 2.0 Pro Experimental offers a 2-million-token context window, twice the 1 million tokens of 2.0 Flash, enough to analyze the equivalent of 2-hour videos, 19-hour audio files, or 2,000-page documents.

This makes it well suited to tasks such as building complex statistical models, reasoning about quantum algorithms, or parsing large codebases.

(Source: Google)
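To get a feel for what 2 million tokens holds, the sketch below estimates whether a set of files fits in the window. It assumes roughly 4 characters per token, a common rule of thumb for English text rather than a Gemini-specific figure; real counts vary, especially for code, and the Gemini API's token-counting endpoint gives exact numbers. The names `estimate_tokens` and `fits_in_context` are illustrative, not part of any SDK.

```python
import math

# Context size stated for Gemini 2.0 Pro Experimental.
CONTEXT_WINDOW_TOKENS = 2_000_000
# Heuristic assumption: about 4 characters per token (not Gemini's real tokenizer).
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str, chars_per_token: float = CHARS_PER_TOKEN) -> int:
    """Approximate a token count from character length, rounding up."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(texts: list[str], window: int = CONTEXT_WINDOW_TOKENS) -> tuple[bool, int]:
    """Return (fits, estimated_total) for a collection of documents or files."""
    total = sum(estimate_tokens(t) for t in texts)
    return total <= window, total


if __name__ == "__main__":
    # Hypothetical codebase: 5,000 files of about 1,200 characters each.
    files = ["x" * 1_200] * 5_000
    fits, total = fits_in_context(files)
    print(f"~{total:,} tokens, fits: {fits}")
```

With these made-up numbers the estimate is about 1.5 million tokens, inside the window; a real codebase should be measured with the API's token counter before relying on the heuristic.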

The model outperforms earlier versions on coding benchmarks, with developers praising its ability to handle intricate prompts such as "generate a specific program from scratch" or "solve mathematical proofs with step-by-step reasoning".

Tool Integration for Real-World Applications

Unlike competitors such as OpenAI's o3-mini, Gemini 2.0 Pro supports native tool use, including Google Search and code execution. This lets it fetch real-time data, validate answers, and automate workflows, a critical advantage for enterprise applications.

For example, developers can now build AI agents that autonomously execute multi-step tasks, such as generating reports using live data or debugging software with contextual awareness.
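The agent pattern described above can be sketched as a loop in which a model repeatedly picks a tool, sees the result, and decides whether it is done. This is a toy, offline illustration under stated assumptions: `scripted_model` is a hypothetical stand-in for the LLM, and `fake_search` and `fake_code_execution` are hypothetical stand-ins for Google Search and code execution. None of it is the Gemini API.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class ToolCall:
    name: str      # which tool the model wants to use
    argument: str  # input passed to that tool


@dataclass
class FinalAnswer:
    text: str


def fake_search(query: str) -> str:
    """Hypothetical stand-in for Google Search: returns canned 'live' data."""
    return "units_sold=120,price=2.5"


def fake_code_execution(expression: str) -> str:
    """Hypothetical stand-in for code execution: multiplies two numbers 'a*b'."""
    a, b = expression.split("*")
    return str(float(a) * float(b))


TOOLS: dict[str, Callable[[str], str]] = {
    "search": fake_search,
    "code": fake_code_execution,
}


def scripted_model(history: list[str]) -> ToolCall | FinalAnswer:
    """Hypothetical stand-in for the LLM: chooses the next step from past results."""
    if not any(h.startswith("search:") for h in history):
        return ToolCall("search", "latest sales figures")
    if not any(h.startswith("code:") for h in history):
        # Parse the search result and ask the code tool to compute revenue.
        raw = history[-1].removeprefix("search:")
        data = dict(pair.split("=") for pair in raw.split(","))
        return ToolCall("code", f"{data['units_sold']}*{data['price']}")
    revenue = history[-1].removeprefix("code:")
    return FinalAnswer(f"Report: revenue is {revenue}")


def run_agent(model: Callable[[list[str]], ToolCall | FinalAnswer], max_steps: int = 5) -> str:
    """Loop: ask the model for a step, run the chosen tool, record the result."""
    history: list[str] = []
    for _ in range(max_steps):
        step = model(history)
        if isinstance(step, FinalAnswer):
            return step.text
        result = TOOLS[step.name](step.argument)
        history.append(f"{step.name}:{result}")
    raise RuntimeError("agent did not finish within max_steps")


if __name__ == "__main__":
    print(run_agent(scripted_model))
```

Run as a script, this prints `Report: revenue is 300.0`. The `max_steps` cap is a simple guard against a model that never decides it is finished.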

Early Access and Iterative Refinement

The model is currently available in Google AI Studio, Vertex AI, and the Gemini app for Gemini Advanced subscribers; its experimental label reflects Google's strategy of rapid iteration based on user feedback.

Early adopters gain priority access to features like Deep Research, which helps analyze complex topics, but must tolerate occasional instability.

Pricing

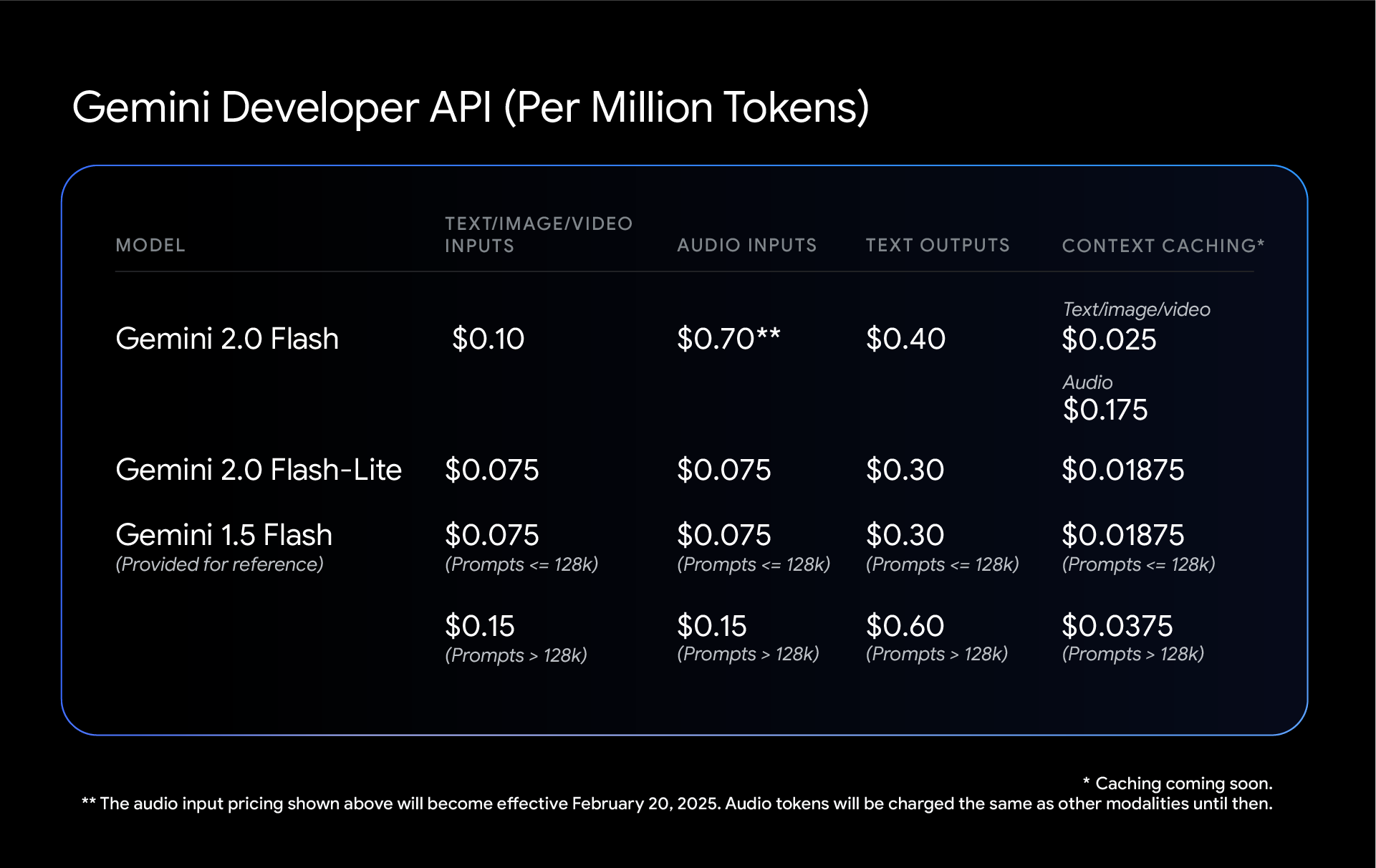

While pricing for Gemini 2.0 Pro has not been disclosed, its siblings, 2.0 Flash ($0.10 per million input tokens) and 2.0 Flash-Lite ($0.075 per million input tokens), set a new affordability benchmark.
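The price gap compounds at scale. The sketch below computes input-token costs from the per-million rates Google quotes for 2.0 Flash ($0.10) and 2.0 Flash-Lite ($0.075). It is a simplified illustration: it covers input tokens only (output tokens are billed separately, at higher rates), and the 50-million-token monthly workload is hypothetical.

```python
from decimal import Decimal

# USD per million *input* tokens, as published by Google at launch.
PRICE_PER_MILLION_INPUT = {
    "2.0 Flash": Decimal("0.10"),
    "2.0 Flash-Lite": Decimal("0.075"),
}


def input_cost(model: str, tokens: int) -> Decimal:
    """Cost in USD of sending `tokens` input tokens to `model` (output not included)."""
    return PRICE_PER_MILLION_INPUT[model] * tokens / 1_000_000


if __name__ == "__main__":
    monthly_tokens = 50_000_000  # hypothetical workload
    for name in PRICE_PER_MILLION_INPUT:
        print(f"{name}: ${input_cost(name, monthly_tokens):.2f}")
```

For the hypothetical 50 million tokens this gives $5.00 versus $3.75, a $1.25 difference. `Decimal` keeps the money arithmetic free of floating-point rounding.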

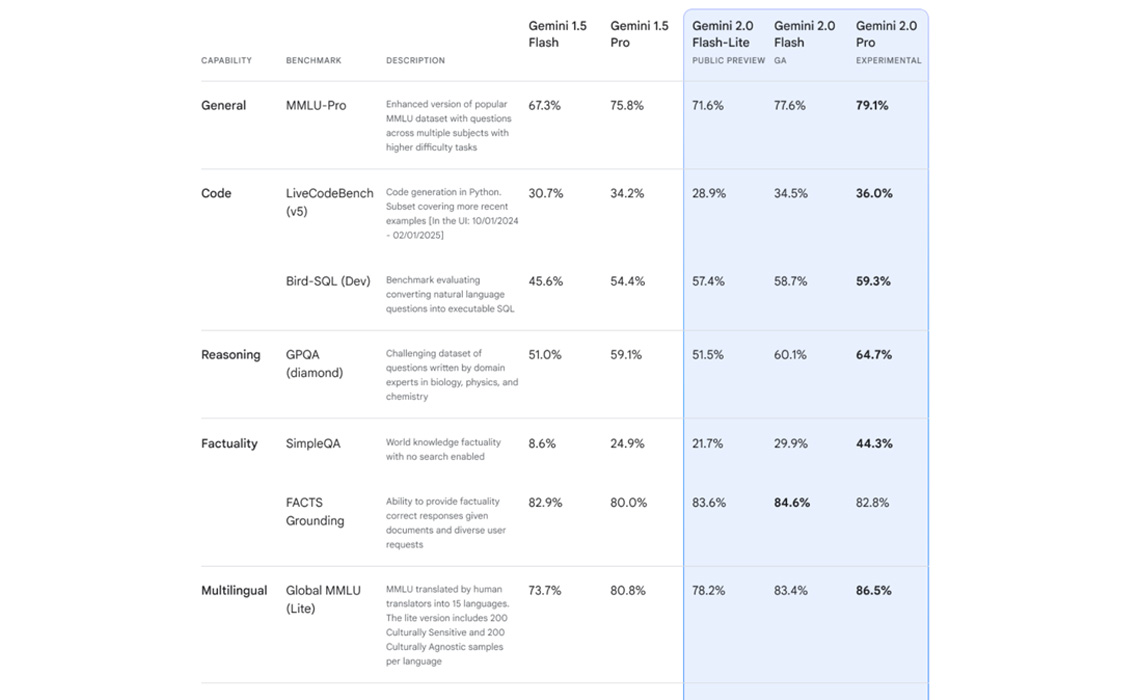

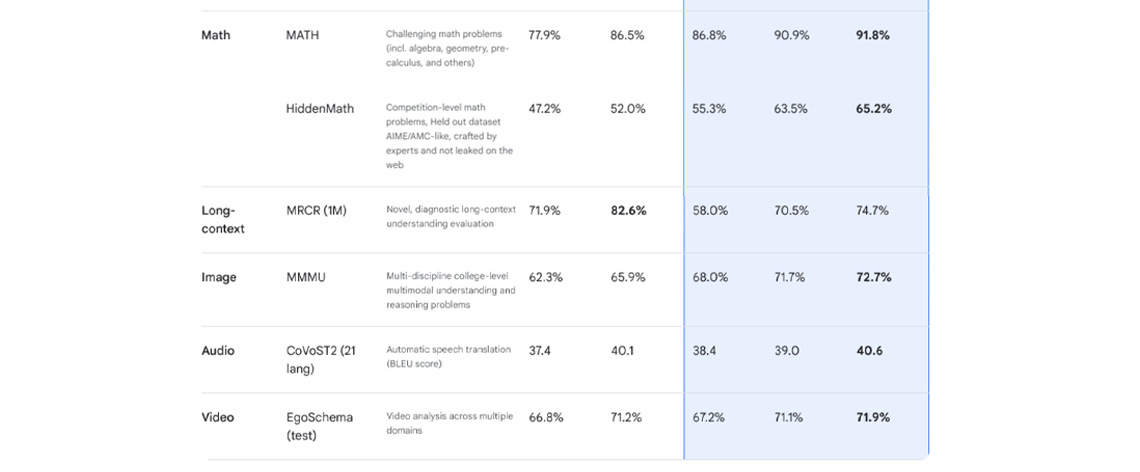

Flash-Lite outperforms Gemini 1.5 Flash on benchmarks like MMLU-Pro (77.6% vs. 67.3%) while costing less than rivals such as Anthropic's Claude or OpenAI's GPT-4o mini.

(Source: Google)

Safety, Scalability, and Future Roadmap

Reinforcement Learning for Accuracy

Google is using reinforcement learning techniques in which Gemini critiques its own responses, which it says yields more accurate feedback and improves how the model handles sensitive prompts. The company is also testing against security risks such as indirect prompt injection attacks.

Multimodal Expansion on the Horizon

Although the Gemini 2.0 models currently produce only text output, Google plans to roll out image and audio generation in the coming months. This aligns with its multimodal strengths, as competitors such as DeepSeek-R1 still rely on text-only analysis for uploaded files.

Editor's Comments

Google's Gemini 2.0 Pro isn't just another LLM—it's a calculated bet on AI agents as the next frontier.

By combining massive context windows with tool integration, it positions itself as a Swiss Army knife for enterprises tackling data-heavy workflows.

However, the "experimental" tag signals that the model is still a work in progress, and early adopters may run into problems like the errors that led Google to pause Gemini's image generation of people in early 2024.

Expect Google to leverage its ecosystem (Search, Maps, YouTube, etc.) to create vertically integrated AI solutions.

If Gemini 2.0 Pro stabilizes, it could challenge OpenAI's dominance in coding-specific models, especially with its cost-efficient siblings undercutting rivals. But the real test lies in whether developers tolerate its experimental quirks for long-term gains.