Alibaba has unveiled Tongyi DeepResearch, a new large language model designed to compete with leading AI systems such as OpenAI's Deep Research.

The launch marks a significant step in Alibaba's effort to expand its influence in the global AI market while targeting cost-conscious enterprises, research institutions, and advanced productivity users.

What Is Tongyi DeepResearch?

Tongyi DeepResearch, an open-source AI agent developed by Alibaba Cloud's Tongyi Lab, was officially released on September 17, 2025.

Designed as a specialized large language model (LLM), it is optimized for long-horizon, deep information-seeking tasks such as multi-step web research, report synthesis, and complex query resolution.

Unlike consumer-facing chatbots, Tongyi DeepResearch prioritizes research-grade intelligence, technical precision, and cost efficiency. Its positioning indicates a clear focus on professionals working in academia, finance, healthcare, and other data-intensive industries.

The model is built on a Mixture-of-Experts (MoE) architecture with 30.5 billion total parameters, of which only about 3–3.3 billion are active per token. Because each token is routed through a small subset of experts, the compute cost per token is closer to that of a roughly 3B dense model than a 30B one.
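To put those figures in perspective, the share of parameters exercised per token can be worked out directly. The following is a rough sketch that uses only the numbers quoted above; the variable and function names are illustrative and do not come from Alibaba's code.

```python
# Parameter counts quoted in the article, in billions.
TOTAL_PARAMS_B = 30.5
ACTIVE_PARAMS_B_RANGE = (3.0, 3.3)  # lower and upper figures for active parameters


def active_fraction(active_b: float, total_b: float) -> float:
    """Return the fraction of parameters used for a single token."""
    return active_b / total_b


low, high = (active_fraction(a, TOTAL_PARAMS_B) for a in ACTIVE_PARAMS_B_RANGE)
print(f"Active share per token: {low:.1%} to {high:.1%}")
# prints "Active share per token: 9.8% to 10.8%"
```

In other words, roughly one tenth of the weights take part in each forward pass. Note that this reduces compute per token, not memory: all 30.5 billion parameters still have to be loaded.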

Notably, Alibaba has made the full model, training pipeline, and inference framework publicly available on GitHub and Hugging Face, and describes it as the first fully open-source web agent positioned to rival proprietary systems.

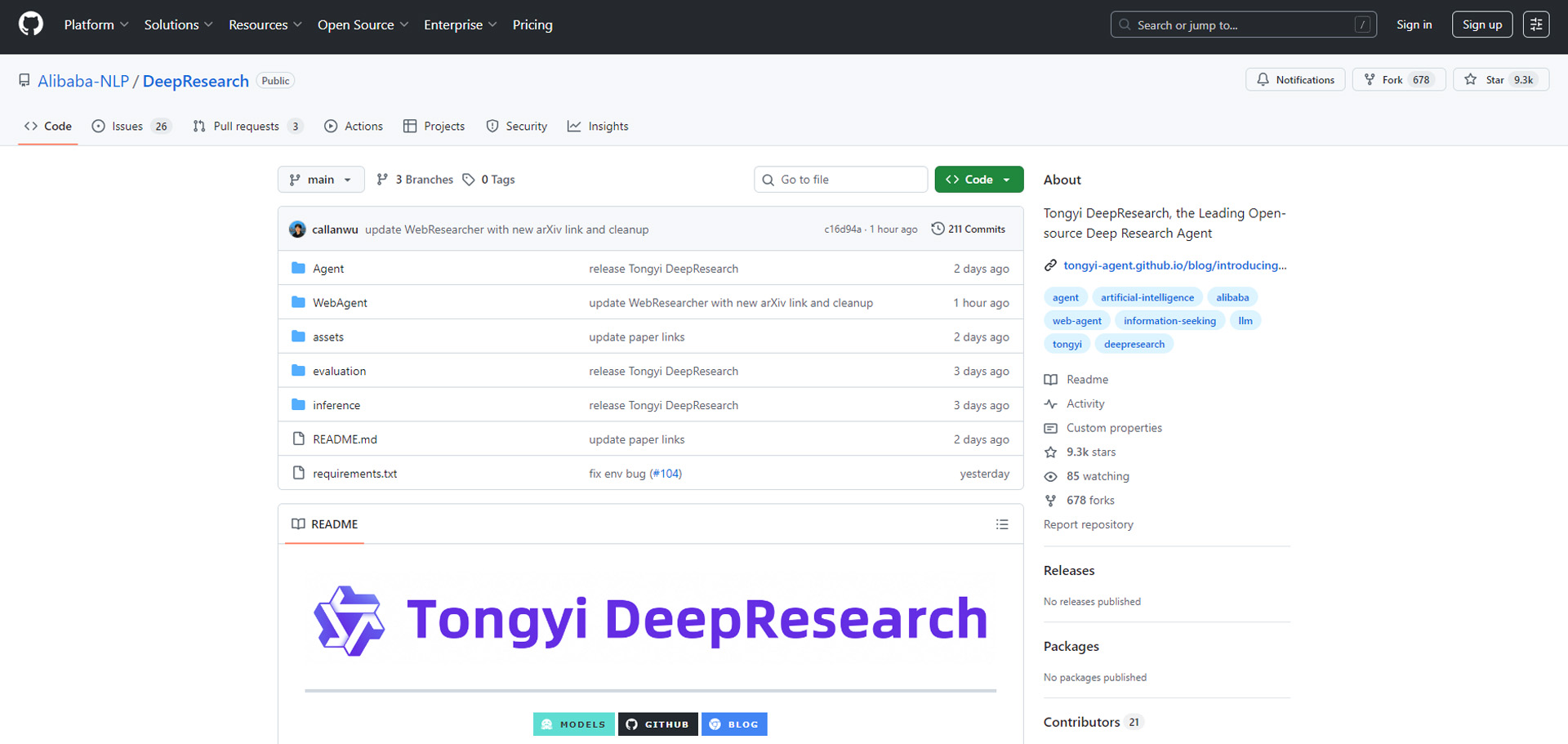

(Alibaba Tongyi DeepResearch GitHub Page)

Core Features & Capabilities

Intelligence (Benchmarks)

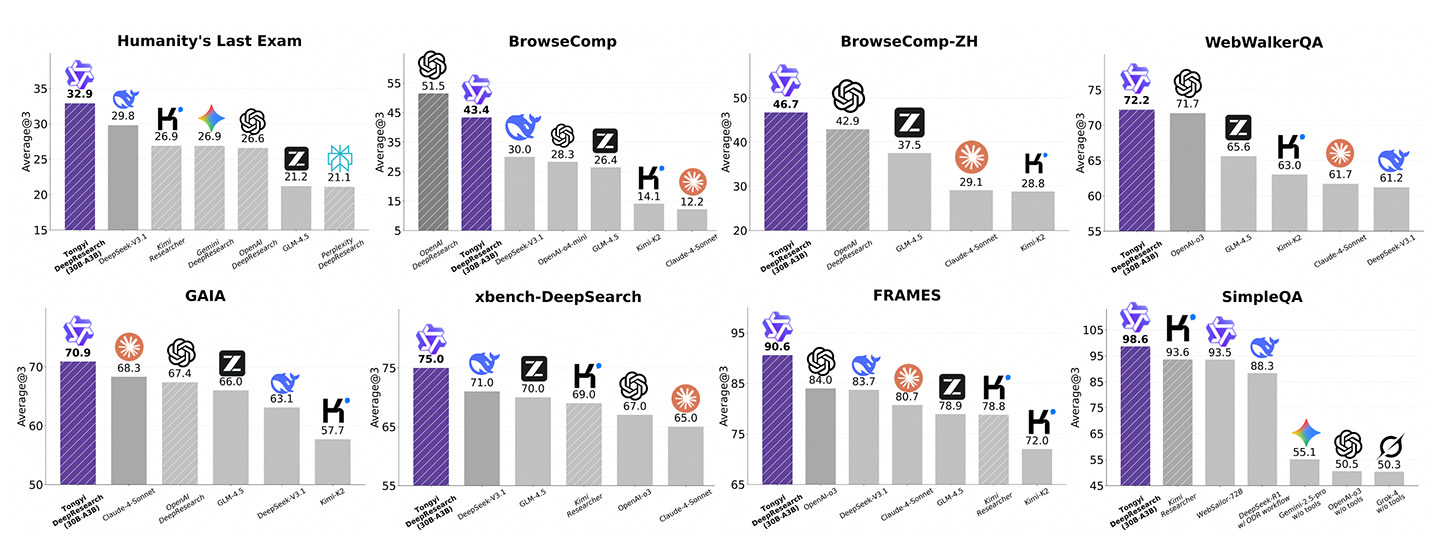

Tongyi DeepResearch achieves state-of-the-art scores on specialized agentic benchmarks.

It leads in xbench-DeepSearch (75.0), BrowseComp-ZH (46.7), and GAIA (70.9), showing particular strength in Chinese-language retrieval and domain-specific reasoning.

On BrowseComp (English) it scores 43.4, behind OpenAI DeepResearch (51.5) but ahead of DeepSeek V3.1 (30.0). It also trails in general MMLU-style evaluations, where proprietary models from OpenAI and Anthropic maintain roughly 88–90% accuracy.

To sum up, Tongyi's intelligence is tuned for research and factual depth rather than broad consumer tasks.

Cost Efficiency

Tongyi DeepResearch is available free of charge for developers and enterprises because it is released as an open‑source model under the Apache 2.0 license.

This permissive license allows full commercial use, customization, and deployment.

The model can be downloaded and run via Hugging Face, GitHub, or Alibaba's ModelScope platform.

Although the model itself is free, API providers may charge for hosted access.

For example, OpenRouter lists pricing for the Tongyi DeepResearch 30B A3B model at $0.09 per 1M input tokens and $0.45 per 1M output tokens.

This rate places Tongyi far below proprietary competitors such as Google Gemini or Anthropic Claude, which typically cost several dollars per million tokens.

As a result, Tongyi offers one of the most cost‑efficient options for large‑scale, research‑intensive workloads.
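A back-of-the-envelope calculation shows what the OpenRouter rates mean in practice. This is a minimal sketch: the prices are the ones quoted above, while the workload size is a hypothetical chosen purely for illustration.

```python
# OpenRouter prices quoted in the article, in USD per 1M tokens.
INPUT_PRICE_PER_M = 0.09
OUTPUT_PRICE_PER_M = 0.45


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the hosted-API cost in USD for a given token volume."""
    input_cost = (input_tokens / 1_000_000) * INPUT_PRICE_PER_M
    output_cost = (output_tokens / 1_000_000) * OUTPUT_PRICE_PER_M
    return input_cost + output_cost


# Hypothetical workload: 1,000 research runs, each reading 100K tokens
# of source material and writing a 5K-token report.
runs = 1_000
cost = estimate_cost(runs * 100_000, runs * 5_000)
print(f"Estimated cost: ${cost:.2f}")  # prints "Estimated cost: $11.25"
```

Actual bills depend on the provider's current pricing and on how many intermediate tool calls and reasoning tokens an agent run consumes, so treat the figure as an order-of-magnitude estimate.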

Context Window

Tongyi supports a 128,000-token context window, matching GPT-4o but falling short of Gemini's 1–2 million tokens.

While it cannot process ultra-long documents at Gemini's scale, 128K is sufficient for most enterprise-grade research tasks such as literature reviews, regulatory compliance, and large dataset queries.
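Whether a given set of documents fits in that window can be checked roughly before sending anything to the model. The sketch below assumes about four characters per token (a common rule of thumb for English text, not a property of Tongyi's tokenizer) and an arbitrary reserve for the model's answer; both values are assumptions.

```python
CONTEXT_WINDOW_TOKENS = 128_000  # context window quoted in the article
CHARS_PER_TOKEN = 4              # assumption: rough average for English text
RESERVED_FOR_OUTPUT = 8_000      # assumption: tokens held back for the answer


def estimate_tokens(text: str) -> int:
    """Approximate the token count from character length (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(documents: list[str]) -> bool:
    """Check whether all documents fit in the prompt budget at once."""
    budget = CONTEXT_WINDOW_TOKENS - RESERVED_FOR_OUTPUT
    return sum(estimate_tokens(doc) for doc in documents) <= budget


# Hypothetical corpus: papers of about 40,000 characters (~10,000 tokens) each.
paper = "x" * 40_000
print(fits_in_context([paper] * 10))  # True: about 100,000 estimated tokens
print(fits_in_context([paper] * 13))  # False: about 130,000 estimated tokens
```

For an exact count, the model's own tokenizer should be used instead of the character heuristic.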

Speed and Deployment

Built with a mixture-of-experts (MoE) architecture, Tongyi maintains fast throughput with low latency.

Estimated throughput exceeds 100 tokens per second on modest hardware, with time-to-first-token under one second.
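Those two estimates translate into a simple bound on response time: time to first token plus output length divided by throughput. A small sketch, taking the article's figures at face value (100 tokens per second as a floor, one second as a ceiling); the function name and the report length are illustrative.

```python
THROUGHPUT_TOKENS_PER_S = 100.0  # article's estimate, treated as a lower bound
TIME_TO_FIRST_TOKEN_S = 1.0      # article's estimate, treated as an upper bound


def estimate_generation_time(
    output_tokens: int,
    throughput: float = THROUGHPUT_TOKENS_PER_S,
    ttft: float = TIME_TO_FIRST_TOKEN_S,
) -> float:
    """Return the approximate seconds needed to generate output_tokens."""
    return ttft + output_tokens / throughput


# Hypothetical 2,000-token research summary.
seconds = estimate_generation_time(2_000)
print(f"About {seconds:.0f} s")  # prints "About 21 s"
```

This covers generation only. A deep-research run also spends time on web requests and tool calls, which this estimate ignores.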

Unlike proprietary systems requiring heavy infrastructure, Tongyi's lean design enables deployment on consumer PCs, which enhances accessibility for research groups and small enterprises.

Scale and Accessibility

Tongyi DeepResearch has 30.5B total parameters with roughly 3B active per token, far smaller than the flagship models from OpenAI or Google, whose sizes are undisclosed but widely assumed to be much larger.

Despite its lighter scale, Tongyi delivers near-parity in agent benchmarks.

Most importantly, it is fully open-source on GitHub and Hugging Face, contrasting with the closed APIs of GPT, Gemini, and Claude.

This openness allows developers to build custom research agents without ecosystem lock-in.

Comparison with Key Competitors

(Source: Alibaba Tongyi DeepResearch GitHub Page)

Benchmark results published on Alibaba's GitHub page show how Tongyi DeepResearch performs relative to OpenAI DeepResearch, Gemini, DeepSeek, Claude, and others:

- Humanity's Last Exam: Tongyi 32.9, higher than DeepSeek V3.1 (29.8), Gemini DeepResearch (26.9), and OpenAI DeepResearch (26.6).
- BrowseComp (English): OpenAI DeepResearch leads with 51.5; Tongyi follows at 43.4, above DeepSeek V3.1 (30.0).
- BrowseComp-ZH (Chinese): Tongyi leads with 46.7, ahead of OpenAI DeepResearch (42.9) and GLM-4.5 (37.5).
- WebWalkerQA: Tongyi 72.2, slightly higher than OpenAI o3 (71.7) and ahead of GLM-4.5 (65.6).
- GAIA: Tongyi 70.9, ahead of Claude-4-Sonnet (68.3) and OpenAI DeepResearch (67.4).
- xbench-DeepSearch: Tongyi 75.0, ahead of DeepSeek V3.1 (71.0), GLM-4.5 (70.0), and OpenAI o3 (67.0).
- FRAMES: Tongyi 90.6, well above OpenAI o3 (84.0) and DeepSeek V3.1 (83.7).
- SimpleQA: Tongyi 98.6, higher than Kimi Researcher (93.6) and WebSailor-72B (93.5), and far above Gemini (55.1) and OpenAI o3 (50.5).

Overall, Tongyi DeepResearch ranked first in seven out of eight benchmark tasks. Its strongest advantages are in Chinese-language understanding, factual retrieval, and simple Q&A.
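The seven-of-eight tally can be reproduced from the scores listed above. The sketch below includes only the systems and numbers quoted in this article, so it is a consistency check on the list rather than an independent ranking.

```python
# Scores quoted in the list above: benchmark -> {system: score}.
scores = {
    "Humanity's Last Exam": {"Tongyi": 32.9, "DeepSeek V3.1": 29.8,
                             "Gemini DeepResearch": 26.9, "OpenAI DeepResearch": 26.6},
    "BrowseComp": {"OpenAI DeepResearch": 51.5, "Tongyi": 43.4, "DeepSeek V3.1": 30.0},
    "BrowseComp-ZH": {"Tongyi": 46.7, "OpenAI DeepResearch": 42.9, "GLM-4.5": 37.5},
    "WebWalkerQA": {"Tongyi": 72.2, "OpenAI o3": 71.7, "GLM-4.5": 65.6},
    "GAIA": {"Tongyi": 70.9, "Claude-4-Sonnet": 68.3, "OpenAI DeepResearch": 67.4},
    "xbench-DeepSearch": {"Tongyi": 75.0, "DeepSeek V3.1": 71.0,
                          "GLM-4.5": 70.0, "OpenAI o3": 67.0},
    "FRAMES": {"Tongyi": 90.6, "OpenAI o3": 84.0, "DeepSeek V3.1": 83.7},
    "SimpleQA": {"Tongyi": 98.6, "Kimi Researcher": 93.6, "WebSailor-72B": 93.5,
                 "Gemini": 55.1, "OpenAI o3": 50.5},
}

# For each benchmark, pick the system with the highest quoted score.
leaders = {bench: max(results, key=results.get) for bench, results in scores.items()}
tongyi_wins = [bench for bench, leader in leaders.items() if leader == "Tongyi"]

print(f"Tongyi leads {len(tongyi_wins)} of {len(scores)} benchmarks")
# prints "Tongyi leads 7 of 8 benchmarks"
```

The one exception is English BrowseComp, where OpenAI DeepResearch holds the top quoted score.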

OpenAI maintains an edge in English browsing, while Gemini lags considerably on the research benchmarks where its scores are reported.

These results underscore Alibaba's strategy to dominate enterprise and research-heavy applications, especially in multilingual contexts where Western models still show gaps.

Editor's Comments

Alibaba's Tongyi DeepResearch signals a shift in the LLM race, away from closed, consumer chatbots and toward open, research-grade systems.

Its strong benchmark scores, open-source license, and low-cost accessibility make it especially appealing to enterprises and academia.

While it trails in some English browsing tasks, Tongyi's dominance in research benchmarks and multilingual depth positions it as a serious challenger to Western incumbents.